For the entirety of the modern era, power was human.

Economic strength meant people. More workers. More soldiers. More votes. Seapower built empires. Airpower changed war. Firepower — and then nuclear power — enforced order on the world.

Each came with its own infrastructure. Its own logic. Its own elite. But all of it relied on manpower: every factory, every fleet, every war room.

Minds to design its systems. Coordination to build its machinery. Hands to operate its levers. Instinct to command its movements.

That world is ending.

Mass automation and agentic AI are quickly severing the link between power and people. Machines are no longer passive tools. Instead, they can now reason, coordinate, and decide for themselves. Multi-agent systems work together as actors, negotiating objectives, strategising across domains, and taking decisions in environments that once demanded human oversight.

Across industries, white-collar roles are being hollowed out, replaced not by workers, but by agentic workflows. At BT, the UK’s largest telecoms provider, chief executive Allison Kirkby has openly stated that AI could allow the company to shrink even further than planned. The company is already cutting up to 55,000 jobs by 2030 but, as Kirkby puts it, “Depending on what we learn from AI… there may be an opportunity for BT to be even smaller by the end of the decade.”

Others have drawn similar conclusions. At IBM, Klarna, and Duolingo, layoffs are dressed up as “efficiencies,” “restructures,” or “adjustments to macro conditions.” The language is soft. The consequences aren’t. Ocado now picks orders in half the time it did a decade ago — with 500 fewer workers. This is the lay of the land. Any executive not deploying AI today isn’t being cautious; they’re being negligent.

Ironically, blue-collar work (long assumed to be first in the firing line) is proving more resilient for now. But advances in robotics, driven by AI breakthroughs like world models, are rapidly pushing machines into the physical world too.

The Intelligence Age isn’t going to stop at the keyboard.

In many ways, we have already crossed the Rubicon. The question is no longer who does the work. It’s whether we do it at all.

Some will call it displacement. Others will call it progress. In reality, it's both. Jobs are being redesigned around AI: less repetition, more oversight, more value placed on human judgement, creativity, and empathy. The cross-party line is that what makes us us is what will save us.

But if we are honest with ourselves, this is a shoestring stopgap. Hope dressed as certainty. A fragile answer to a far deeper and more pressing issue.

Because the question is no longer about whether AI will change work — it already has. Nor is it even what happens next (many of us are already learning what it means to be ‘the human in the loop’).

Instead, the underlying question is what happens when the human in the loop becomes the liability, when machines no longer support human judgement, creativity, and empathy but surpass them?

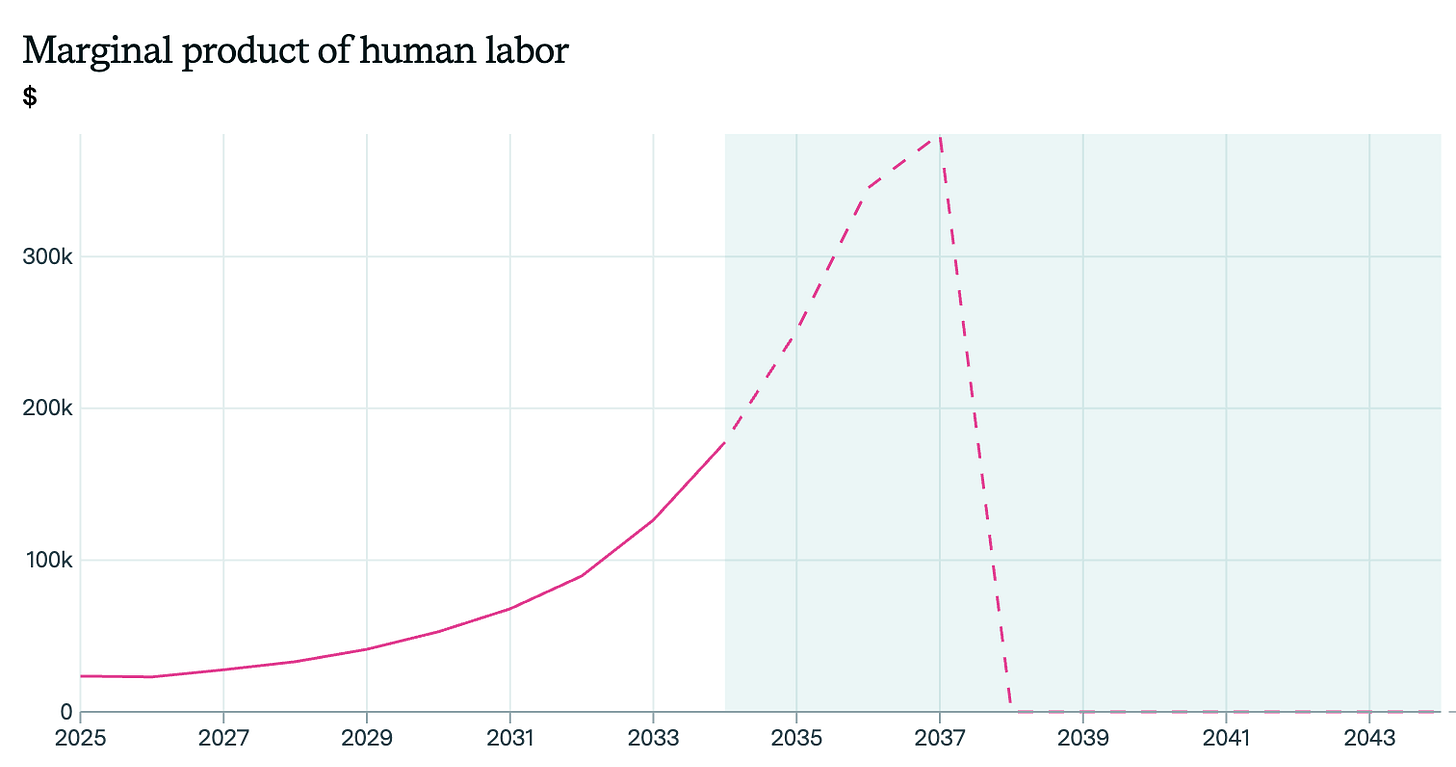

While many still dismiss it as far-fetched, the people building this technology don’t. They believe AI will soon outperform humans at nearly every economically valuable task. If they’re right — and the signs are increasingly hard to ignore — wages won’t just stagnate. They’ll collapse. Not because of mass automation at scale, but because an unstoppable tide of digital labour, with near-zero marginal cost, will render human work obsolete by sheer, ruthless efficiency.

That day is fast approaching, and with it the centre of gravity shifts: productivity decoupled from participation.

When this happens, we will find ourselves very far off the reservation indeed.

To put our current trajectory in historical perspective, we might look to the economist Joseph Schumpeter. It was Schumpeter who popularised the term ‘creative destruction’ — the process by which new innovations dismantle old industries to make way for the new.

In the past, this cycle displaced workers but ultimately reabsorbed them. Farmhands became factory workers. Factory workers moved into services. Each time, the system adjusted, reinvented, re-employed. But given AI’s capabilities, this time there’s no obvious landing zone.

“It does seem like the balance of power between capital and labo[u]r could easily get messed up, and this may require early intervention.”

Sam Altman, 2025

Schumpeter warned that capitalism might collapse not from crisis, but from success. In Capitalism, Socialism and Democracy, he foresaw a world where capitalism’s victories bred a new class of salaried intellectuals and bureaucrats — people who owed everything to the system, yet believed in nothing it stood for. Not revolutionaries. Managers.

I would argue we already live in the world he described. The Leviathan is bloated. Society is saturated in bureaucracy. The system still moves, but no one remembers why.

With AI now in the mix, it’s no longer clear that this sorry situation will hold. What if creative destruction doesn’t exhaust society — but outruns it? What if AI doesn’t just displace workers temporarily, but removes them permanently and faster than new categories of work can emerge? What — to reference another mid-20th-century economist — if this wave never crashes?

The traditional responses, whether capitalist retraining or socialist organising, fall short for the simple reason that they both assume a world where manpower matters.

"Workers of the world" only works when there are workers. Upskilling doesn’t make sense when your colleague is a machine with the world's knowledge at its (potentially limitless) fingertips. Strikes and unions were designed for a world where you could throw down your tools if your needs were not met.

But AI is not a tool. It doesn’t assist — it replaces. In the wake of machine-scale, agentic productivity, these proposals feel like holdovers from a bygone era. Rituals from a world when the system still needed us.

It is not clear if this world still exists. Or, if it does, for how much longer. This is the reality of our present situation.

We’ve already built machines that turn weeks of labour into hours — and they do it again and again, without rest. These systems won’t plateau. They will only improve in their capabilities. In this scenario — our current one — the cost of labour will not decline gradually. It will fall off a cliff.

For now, humans remain bottlenecks. Not everywhere, but in enough places to hold back full automation. Tasks requiring physical dexterity, emotional nuance, or legal sign-off still depend on us. And building AI still demands rare talent and significant resources. But these are speed bumps, not walls. So the question remains: what becomes of a society when it no longer needs us?

Yuval Noah Harari once called this the emergence of a useless class. A population no longer required for economic production. This is not doom. It’s design. AI is built for efficiency and will deliver it spectacularly. But with efficiency comes consolidation. Not just of capital, but of power — because people power only works when there are people with power.

In an emerging world where productivity is split from participation and warfare is automated, it is unclear where this power will come from. This is one of the most pressing and existential questions of the present moment.

In this new world, those who design and own the models, the compute and the infrastructure will be the humans in the loop. The majority won’t.

Despite the clarity of our current trajectory, the official narrative remains unchanged: AI is just another General Purpose Technology, another K-wave. Like steam. Like electricity. A neutral input. Something to be harnessed that can never truly replace us.

Keir Starmer echoed this almost verbatim at London Tech Week — describing AI as a tool to “make us more human,” a way to “transform services” and “speed up planning decisions.” His vision is one of frictionless upgrade: more efficient hospitals, more personalised lessons, fewer planning delays. A better bureaucracy, running faster.

“This is about improving the lives of working people.”

Keir Starmer, London Tech Week 2025

In many respects, the Prime Minister is right. I’ve covered this space for a decade. AI is already transforming every sector — drafting law, forecasting markets, designing new materials (we all know the drill at this point). The potential for AI to do good in the world is limitless, and the speed at which it is doing so is blinding.

Just last week, a new antibiotic was discovered in three days, the first breakthrough of its kind in more than thirty years. The week before, it was announced that a multi-agent AI system had, for the first time, identified, tested, and explained a novel treatment for blindness. Not too long ago this was the stuff of sci-fi. Now it is reality. I am simply asking what the next sci-fi-turned-reality will be once these tools improve.

Because they will improve — are improving — and in doing so, will almost certainly trigger a golden age: democratising knowledge, collapsing the cost of creativity, and accelerating discovery at a pace no civilisation has ever experienced.

This is why this revolution will be adopted everywhere. When the results are this good, the debate can no longer be about whether to adopt, but how soon, and how totally.

As Starmer made clear in his speech, this is a technology that will touch every part of government. “AI was absolutely central,” he said of the government’s new Strategic Defence Review. “The way war is being fought has changed profoundly” — and he wanted that shift “hardwired” into national strategy.

He spoke of partnerships with Big Tech. Of planning reforms to fast-track data centre construction. Of Britain becoming “an AI maker, not just an AI taker.”

To me, and to many others, this is music to the ears. The Intelligence Age couldn’t have come at a better time. Decades of stagnation and cultural erosion have brought us to a moment of reckoning. The old order, propped up by bureaucratic inertia, has long passed its sell-by date. Societies drift. Institutions stall. Something was always going to break.

And across the globe, among governments, think tanks, tech firms, and parties of both left and right, a consensus is forming: AI is going to do the breaking.

Now, this isn’t about being pro-AI or anti-AI. It’s about clear-eyed analysis of the Great Change that is already underway — not hypothetical, not future tense, but happening. Some of it will be good. Some of it won’t. Which is which depends entirely on your own views and values.

But we must be honest about the realpolitik of tech.

This is not steam. It is not fire. It is not electricity.

Fire does not decide. Steam does not act. Electricity does not wield.

AI does — and so much more.

So this is not another upgrade. It is a shift in kind.

And that is the key difference.

“I would define winning as the whole world consolidates around the American tech stack.”

David Sacks, Trump's AI czar

When looked at this way, it becomes clear that nations are no longer innovating; they are arming.

The future is being built in hardened data centres, cooled by rivers and powered by their own substations. Facilities the size of cities are appearing across the world — not just to serve the cloud, but to secure national advantage. These are not “tools,” and they are not “products.” They are instruments of sovereignty. Symbols of pure power.

Chips are being stockpiled like munitions. Models are being weaponised, banned, and fine-tuned to enforce worldviews. We are not witnessing another technological adoption curve. We are witnessing the emergence of a new theatre of war. A new arena of power. A new underpinning of society.

With AI, the promise is nothing short of an age of abundance: health diagnostics on demand, every child with a world-class tutor, creativity unshackled, services on tap. Every interface tailored to the task at hand. Every experience personalised. Designed for and with you, always.

But behind the glow of demo videos and ministerial speeches lies something else. Power is consolidating. Participation is shrinking. And sovereignty is being rebuilt — not in parliaments, but in data centres.

Private-state alliances are scaling. New geostrategic lines are being drawn. This is not just another wave of innovation. It is a redrawing of the global map in what already looks like a pre-war world.

This convergence of factors is what I am calling machinepower.

Machinepower (noun)

/məˈʃiːnˌpaʊə/

The emerging replacement for manpower.

Where machines assume the roles of workers, soldiers, and decision-makers.

A strategic capability.

The ability to generate, direct, and deploy artificial intelligence at scale.

A geopolitical contest.

A global race to build and control sovereign AI — including models, compute, and infrastructure. A new terrain in which power is asserted and reality shaped.

Machinepower is not just automation (that is part of its mechanism). Nor is it simply about disempowerment, though the superseding of manpower as the engine of society may be its most profound consequence. It is both of these things. And more.

Machinepower is the new basis of sovereignty. The new terrain of power. As seapower once defined empires, and airpower redrew the map of modern war, machinepower will determine who governs, who dominates, and who endures.

To anyone paying attention, the ability to generate, direct, and deploy intelligence at scale is fast becoming the most important strategic capability on Earth. States know this. So do companies. So should you.

“We can be an AI maker, not just an AI taker.”

Keir Starmer, London Tech Week 2025

Machinepower is not theoretical:

On May 29th, 2025, Anthropic’s CEO warned that AI could eliminate half of all entry-level white-collar jobs within five years. LinkedIn’s top executives say the shift has already begun.

Sam Altman has stated that Meta is now offering a $100 million sign-on bonus to OpenAI staff in an attempt to pinch top talent.

Xiaomi, which started as a smartphone manufacturer, uses over 700 AI-guided robots in its Beijing factory to produce a new electric vehicle every 76 seconds.

In partnership with defence startup Helsing, Swedish manufacturer Saab has successfully demonstrated the ability of an AI tool to cue a Gripen E fighter jet pilot to fire missiles in a combat scenario.

Palantir recently revealed the next phase of TITAN — an AI-powered combat vehicle developed with Northrop, Anduril, and L3Harris. It fuses satellite, drone, and sensor data to make battlefield decisions in real time — a glimpse into the next era of autonomous warfare.

Musk’s Department of Government Efficiency may have collapsed, but its core principle of DOGEism — that governance can be restructured around algorithms, interfaces, and optimisation — lives on. Across party lines and across the world, states are cutting headcounts, automating decisions, and reimagining public services with the help of Big Tech.

In October 2024, Biden administration National Security Adviser Jake Sullivan warned that the United States risked “squandering [its] hard-earned lead” if it did not “deploy AI more quickly and more comprehensively to strengthen [the country’s] national security.” And in one of its first executive orders, the second Trump administration declared its goal “to sustain and enhance America’s global AI dominance.”

David Sacks, Trump's AI czar, said last weekend on his podcast: "There's no question that the armies of the future are gonna be drones and robots, and they're gonna be AI-powered. ... I would define winning as the whole world consolidates around the American tech stack."

In February, the European Union announced plans to build four "AI gigafactories" at a cost of $20 billion to lower dependence on U.S. firms.

China has made AI central to its geopolitical strategy. It mandates foreign tech firms to partner with state-backed entities, harvesting IP at scale. Its AI firms — from surveillance to military applications — are flooding global markets with cheap exports designed to capture data and enforce influence.

In response, sanctions. Yet NVIDIA’s Jensen Huang says they’ve only accelerated China’s domestic chip ambitions — fuelled by state subsidies, labour discipline, and industrial patience the West no longer possesses.

In February, U.S. prosecutors unveiled an expanded 14-count indictment accusing former Google software engineer Linwei Ding of stealing artificial intelligence trade secrets to benefit two Chinese companies he was secretly working for.

In The Technological Republic, Palantir CEO Alexander Karp called for a return to seriousness — a rearmament of both mind and machine. The software industry, he argues, must stop optimising for the trivial and once again serve the state.

Trump’s administration has merged Silicon Valley and Washington into one unified war machine. OpenAI, Scale, Palantir, Anduril — these are no longer companies. They are extensions of statecraft.

Meta has invested $14bn in Scale AI, a Pentagon supplier that is now actively recruiting for UK MoD contracts.

The Stargate Project alone will invest half a trillion dollars in U.S. AI infrastructure — a reindustrialisation drive chaired by SoftBank, backed by Oracle and NVIDIA, and propelled by military-grade ambition to reach Artificial General Intelligence (AGI).

But even without AGI (once fringe, now explicit government policy) the systems that are already available today are enough to rewrite the logic of war, governance, work, and culture.

The questions therefore multiply faster than the answers:

Power is decoupling from participation. What happens when governance no longer needs citizens? Will robots pay taxes? What becomes of soldiers when wars are fought by machines?

Models now train on themselves — we're already out of that particular loop. What happens when the models no longer need us — or our data — at all? The copyright debate? An anachronism. Synthetic data has made it irrelevant. It is just one of many misunderstood canaries in the coal mine.

What if you want to stay in the loop? Will you enhance yourself — neurolinked, optimised, always-on? Not to get ahead, but simply to keep up?

When entertainment is not only curated but created for you — uniquely, endlessly, algorithmically — what remains of the shared story? Will culture survive, or splinter into a billion pieces?

What happens when we can no longer believe what we see online? The proposed solution (watermarking) is dead on arrival when no one trusts those doing the marking. Decades of distrust, seeded by legacy media and platform manipulation, have already certified this collapse.

Black-box AI systems will compete for reality. Which will you trust to vet your world and fuel your services? The rainbow box of Silicon Valley? The bro-box of Grok? The red box of Chinese state control? Each is building a different future, and each will shape how you work, what you see, and what you believe.

These are only a few of the questions almost no one is asking and even fewer are answering. Machinepower is the frame I’ll use to explore such things — from infrastructure to ideology, from media to war, from governance to the self.

None of this is hypothetical. It’s business strategy. It’s government policy. The world it portrays will happen (is happening) not because of malice, but because of the extraordinary good AI offers.

The future, therefore, will be neither dystopia nor utopia. It will look like — and will be — the spark of much-needed endless efficiency. A fusion of tech-bro DOGEism and Chinese state control: Chinafornication.

As a result, the Leviathan will finally be slain. But in its place, a new Behemoth will rise. Not democratic, not tyrannical — just vast, unknowable, and agentic. Rule by systems: where power is increasingly concentrated and processes are run autonomously by machines.

This is where I will try to follow this shift: the transfer of power from man to machine, and its consequences.

Whether you’re a policymaker, a founder, or just trying to stay relevant — machinepower is the terrain you’ll be forced to operate on.

This space exists to map it.